I, DRAWING ROBOT

Physical Computing, 1 week

VISUAL EXPRESSION THROUGH EYE MOVEMENT

Our experience empowers people with motor disabilities to express their vision on canvas through eye movement. At the end of the session, participants take home a one-of-a-kind drawing that captures their experience.

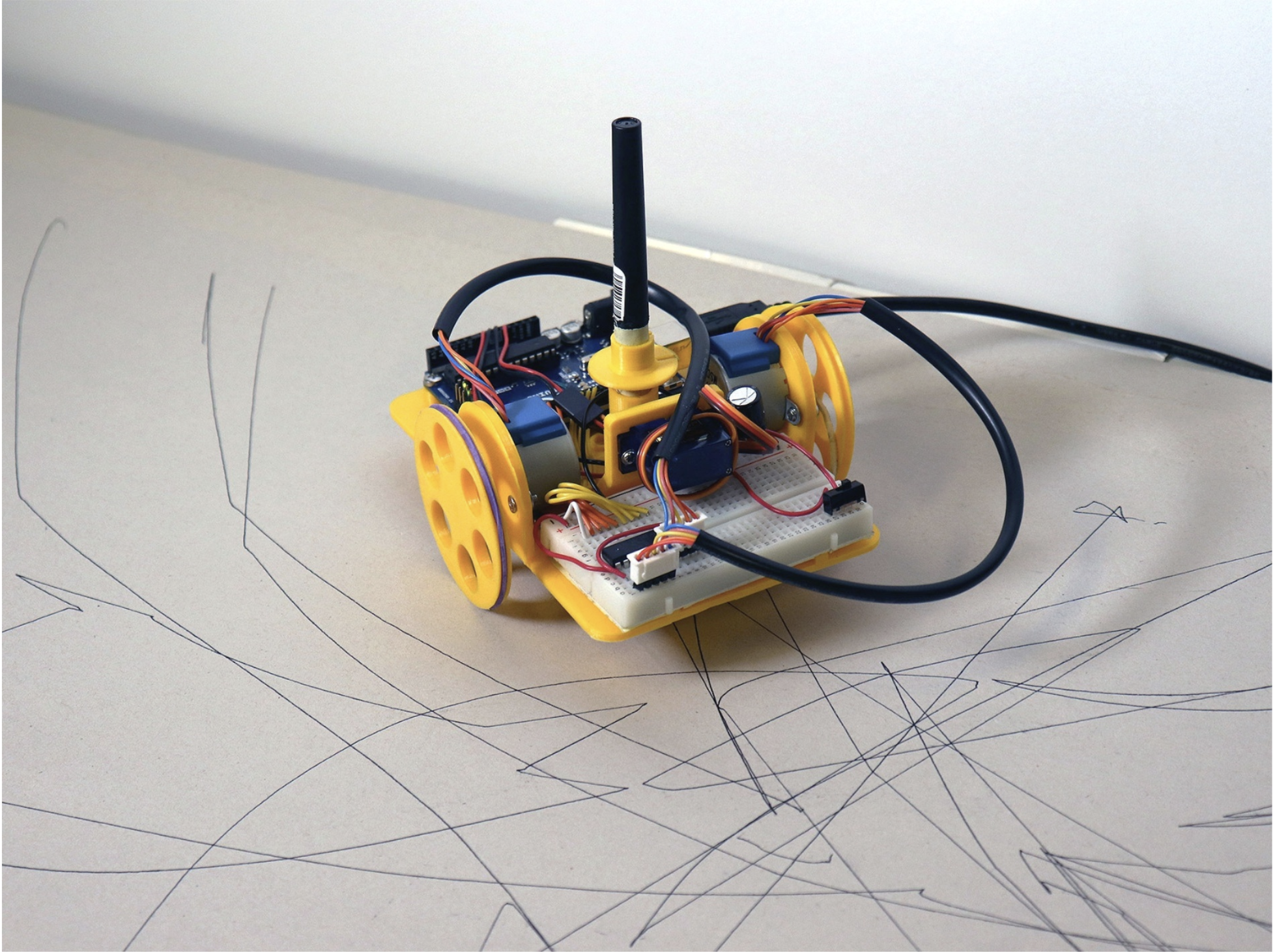

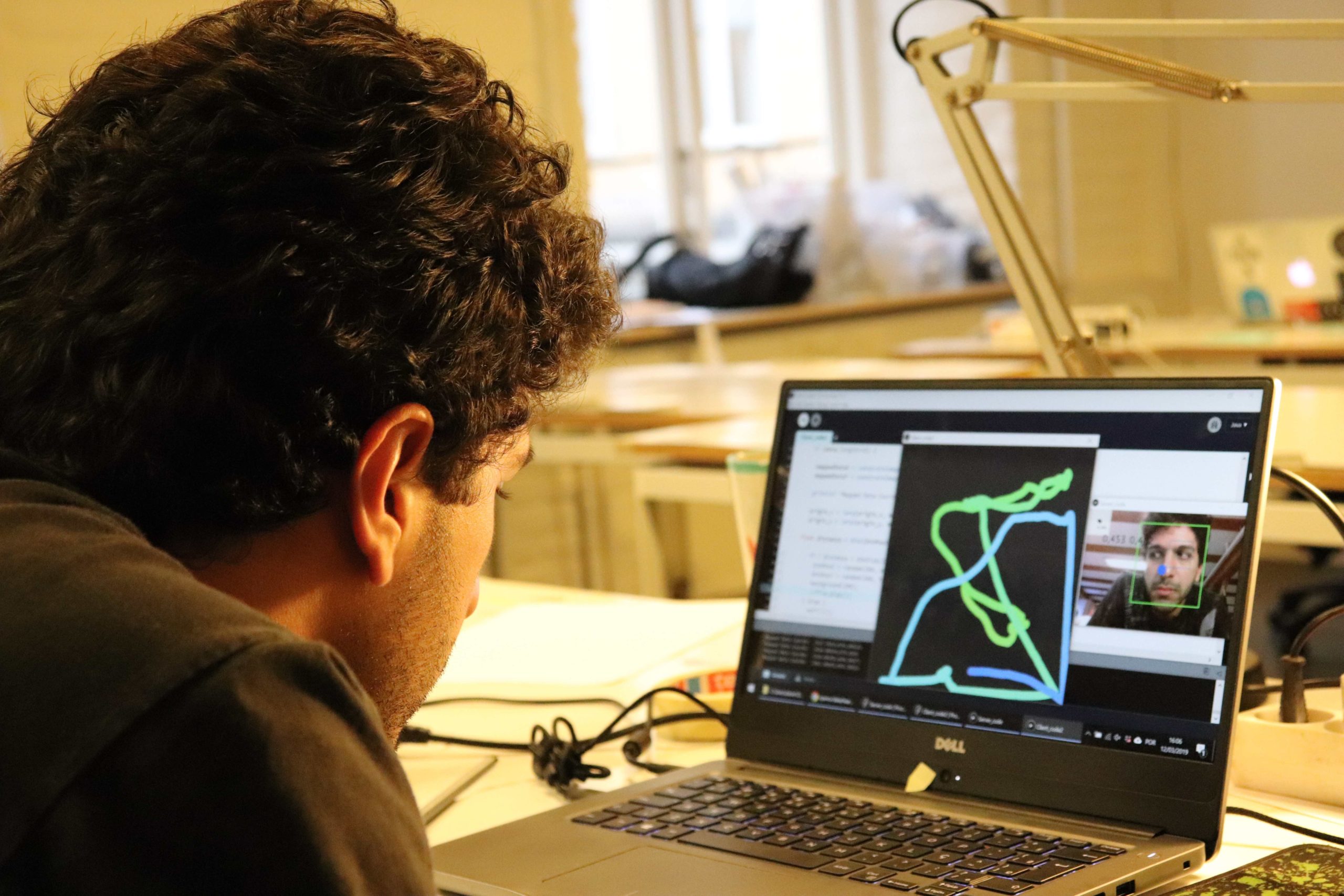

We used the OpenCV library in Processing to track eye movements, which a Processing sketch then mapped to cardinal directions. Processing sends these directional instructions to two Arduinos: one controls the LEDs, and the other ‘steers’ the drawing robot across the canvas, creating abstract patterns.
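
The pipeline described above can be sketched in a few lines. The Python below is a language-neutral illustration, not the Processing/OpenCV code we ran. It makes these assumptions:

- The eye tracker already yields a pupil position and a calibrated centre point.
- The dead-zone size and the single-byte commands are hypothetical.
- The names `gaze_to_direction`, `encode_command` and `broadcast` are hypothetical.

```python
from typing import Optional

# Hypothetical tuning value: gaze offsets smaller than this fraction of the
# eye-region size count as "looking at the centre" and produce no movement.
DEAD_ZONE = 0.15


def gaze_to_direction(pupil_x: float, pupil_y: float,
                      center_x: float, center_y: float,
                      width: float, height: float,
                      dead_zone: float = DEAD_ZONE) -> Optional[str]:
    """Map a pupil position (in pixels) to 'N', 'E', 'S', 'W', or None.

    The offset from the calibrated centre is normalised by the eye-region
    size, and the dominant axis decides the direction. Image y grows
    downwards, so a negative dy means the user is looking up ('N').
    Depending on whether the camera image is mirrored, 'E' and 'W' may
    need to be swapped.
    """
    dx = (pupil_x - center_x) / width
    dy = (pupil_y - center_y) / height
    if abs(dx) < dead_zone and abs(dy) < dead_zone:
        return None  # near the centre: keep the robot still
    if abs(dx) >= abs(dy):
        return "E" if dx > 0 else "W"
    return "S" if dy > 0 else "N"


def encode_command(direction: Optional[str]) -> bytes:
    """Hypothetical framing: one ASCII byte per command, b'0' meaning stop."""
    return (direction or "0").encode("ascii")


def broadcast(direction: Optional[str], ports) -> None:
    """Send the same command to every board (LED Arduino and robot Arduino).

    Each port only needs a write(bytes) method, as pyserial's Serial provides.
    """
    command = encode_command(direction)
    for port in ports:
        port.write(command)
```

In a real setup, `ports` would be two serial connections opened at whatever baud rate the Arduino sketches expect. Here, any object with a `write()` method works, which keeps the mapping logic testable without hardware.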

MY ROLE

Research

Concept Development

Prototyping

Systems Design

Videography

Video Editing

TOOLS

Adobe Illustrator

Adobe Photoshop

Adobe Premiere Pro

Arduino

Fusion 360

Processing

Rhinoceros 3D

BRIEF

"Create a drawing machine that does not use a screen as a means of expression and feedback."

PROCESS

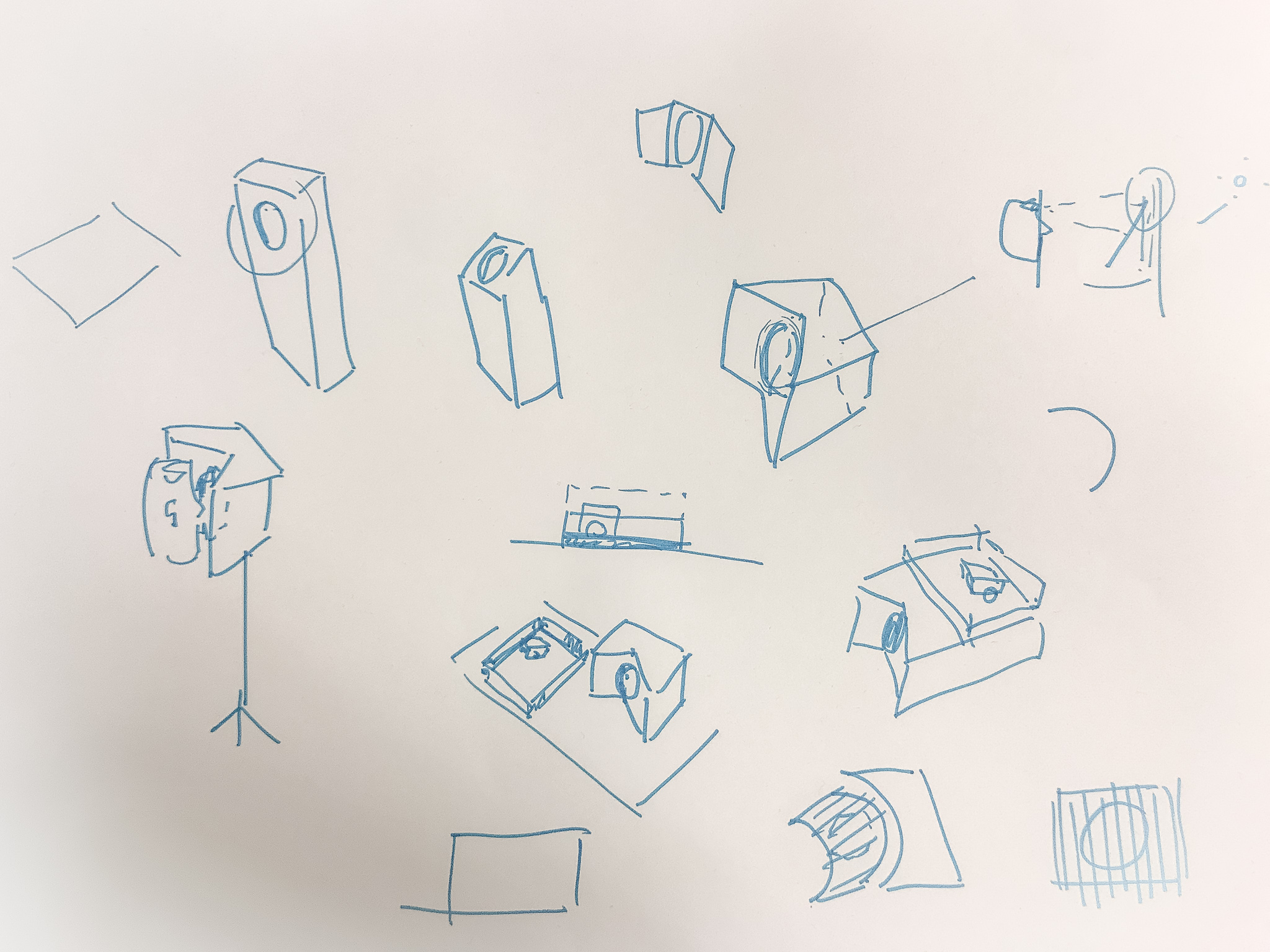

The design process involved concept development and rapid prototyping. Before developing a concept, we asked ourselves: who might use a drawing machine, and why? The project was originally conceived as a serious tool to help people with severe motor impairments express themselves. It grew into a broader, more inclusive experience that could serve a general audience without compromising usability for its initial target group.

Regular feedback and iteration were crucial to the rapid prototyping phase. Drawing on previous learnings, we tested our ideas early for both concept and feasibility, for example by finding software that could handle eye tracking and exploring ways to convert eye-tracking data into drawing. We also coded and fabricated in parallel to streamline our workflow. User testing revealed that participants needed to know what their eye movements translated to without looking at the robot, which led us to develop the feedback lights.
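
Here is a minimal sketch of what the feedback lights could compute. It assumes the LED Arduino receives the same one-byte direction command as the robot and drives one LED per direction. The command bytes, the LED order, and `led_states` are hypothetical. On the actual board, this logic would live in the Arduino sketch and end in `digitalWrite` calls.

```python
from typing import List

# Hypothetical wiring: one LED per direction, indexed 0-3 on the LED Arduino.
LED_FOR_COMMAND = {b"N": 0, b"E": 1, b"S": 2, b"W": 3}
NUM_LEDS = 4


def led_states(command: bytes) -> List[bool]:
    """Return on/off states for the direction LEDs.

    Exactly one LED lights for a direction command; a stop or unknown
    byte turns them all off, so the user can tell "still" from "moving".
    """
    lit = LED_FOR_COMMAND.get(command)
    return [index == lit for index in range(NUM_LEDS)]
```

Because both boards react to the same byte, the lights always reflect the command the robot is acting on. This is what lets a participant keep their eyes off the canvas.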

Low-fidelity prototype of the drawing robot

Team brainstorming on opportunity areas

Prototyping the user experience

Initial sketches of the experience setup

Testing movements on the initial prototype

Interview to better understand people's charging habits

3D printing the drawing robot

Testing the eye-tracking feature in Processing

LEARNINGS

work in parallel

As a team, we learned to test our ideas early and to prototype and code in parallel. We regrouped often during the day to keep communication effective. This arrangement helped us accomplish much more in less time.

live communication between Arduino & Processing

When we learned that Processing sends messages much faster than the robot can respond to them, we calibrated how often Processing relays messages to the Arduino so the robot would not fall behind. This creates immediate feedback between the user’s eye movements and the robot’s drawing.
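
Here is a minimal sketch of such calibration. It assumes the calibration amounts to enforcing a fixed minimum interval between messages. The class name `CommandThrottle` and any interval value are hypothetical, and the injectable clock exists only so the logic can be tested without waiting.

```python
import time
from typing import Callable, Optional


class CommandThrottle:
    """Rate-limit messages from the tracking loop to the Arduino.

    The tracker may produce a new direction every camera frame, far faster
    than the robot can act on it. Forwarding at most one command per
    min_interval seconds helps keep the serial link from queueing stale moves.
    """

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.min_interval = min_interval  # hypothetical; tune against the robot
        self.clock = clock                # injectable so tests need not sleep
        self._last_sent: Optional[float] = None

    def should_send(self) -> bool:
        """Return True, and record the time, if enough time has passed."""
        now = self.clock()
        if self._last_sent is None or now - self._last_sent >= self.min_interval:
            self._last_sent = now
            return True
        return False
```

In the frame loop, the sketch would still compute a direction every frame, but write it to the port only when `should_send()` returns True. That way the robot receives the latest direction rather than a backlog. An alternative would be to have the Arduino acknowledge each completed move before the next command is sent.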
